Google wearable AI can 'learn the language of our bodies'

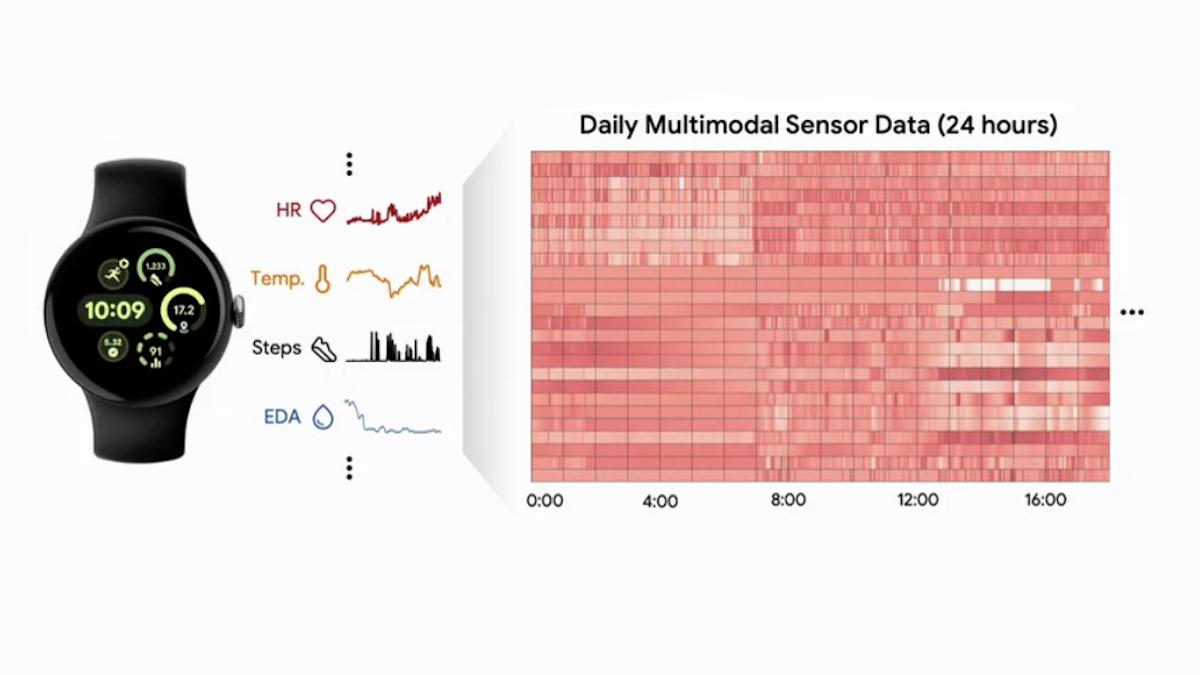

Tech giant Google has developed a new family of sensor–language foundation models that could underpin a coming generation of health and wellness wearable devices.

SensorLM has been trained on 60 million hours of user data and, according to Google, can move wearable sensing beyond merely reporting what is happening in our bodies to "the crucial context of 'why'."

In a blog post, Google researchers Yuzhe Yang and Kumar Ayush gave the example of a heart rate monitor: it can detect a sharp increase, but it generally cannot tell whether the cause was, for example, an uphill run or a stressful public speaking event.

"This gap between raw sensor data and its real-world meaning has been a major barrier to unlocking the full potential of these devices," they wrote, adding that SensorLM bridges the gap because it can interpret and generate "human-readable descriptions from high-dimensional wearable data."

SensorLM was trained on de-identified multimodal sensor data collected over a three-month period from more than 103,000 people across 127 countries, using Fitbit and Pixel Watch devices (both owned by Google), with users' consent.

According to a paper on SensorLM published on the arXiv preprint server, turning the rich, continuous stream of fine-grained raw sensor data from wearable devices into comprehensible insights about human health and behaviour could open up new avenues for clinical decision-making, user engagement, and behavioural interventions.

"The SensorLM family of models represents a major advance in making personal health data understandable and actionable," according to Yang and Ayush. "By teaching AI to comprehend the language of our bodies, we can move beyond simple metrics and toward truly personalised insights."

The next stages for the research team are to layer in new types of sensor readings, including metabolic health and sleep analysis data.

"We envision SensorLM leading to a future generation of digital health coaches, clinical monitoring tools, and personal wellness applications that can offer advice through natural language query, interaction, and generation," they said.

The foundation model could also help Google and its partner Samsung in their ongoing rivalry with Apple, which currently leads the consumer wearables sector with the Apple Watch and is also investing heavily in wearable health applications.

Earlier this month, Apple researchers published their own paper on arXiv detailing how the company aims to apply AI to behavioural data (the way people move, exercise, and sleep) to create future health features for the Apple Watch.