ChatGPT can cut review times for clinical trial eligibility

Manual screening of patient records for inclusion in clinical trials is a costly and inefficient process, and there have been high hopes that it could be sped up with the use of AI.

In a new study, the large language model (LLM) ChatGPT cut the time needed to review whether a patient is suitable for a clinical trial, from an average of around 40 minutes per record to just over a minute in some cases.

However, the researchers behind the work say the LLMs still need to be backed up by manual chart reviews as they "have difficulties identifying patients who meet all eligibility criteria."

The team from UT Southwestern Medical Centre in the US used ChatGPT versions 3.5 and 4.0 to assess 35 patients already enrolled in a phase 2 cancer trial, as well as a random sample of 39 ineligible patients, and compared the LLMs’ performance with that of clinical research staff.

GPT-4 was more accurate than GPT-3.5, though slightly slower and more expensive, according to the researchers, who were led by Dr Mike Dohopolski. Screening times ranged from 1.4 to 3.0 minutes per patient with ChatGPT 3.5, at a cost of $0.02 to $0.03 each, while for ChatGPT 4.0 the ranges were 7.9 to 12.4 minutes and $0.15 to $0.27, respectively.
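To put those per-patient figures in context, the short Python sketch below scales them to the 74 records in the study (35 enrolled plus 39 ineligible). It is an illustration only: it assumes records are screened one after another and that the roughly 40-minute manual average applies to every record, neither of which the study states. The names `MODELS` and `cohort_totals` are hypothetical.

```python
# Figures quoted in the article; the cohort-level totals are illustrative only.
PATIENTS = 35 + 39   # enrolled patients plus the ineligible sample
MANUAL_MINUTES = 40  # approximate manual review time per record (assumed uniform)

# (low, high) screening minutes and US-dollar cost per patient
MODELS = {
    "GPT-3.5": {"minutes": (1.4, 3.0), "cost": (0.02, 0.03)},
    "GPT-4": {"minutes": (7.9, 12.4), "cost": (0.15, 0.27)},
}


def cohort_totals(per_patient, n=PATIENTS):
    """Scale a (low, high) per-patient range to n patients."""
    low, high = per_patient
    return low * n, high * n


for name, figures in MODELS.items():
    lo_min, hi_min = cohort_totals(figures["minutes"])
    lo_cost, hi_cost = cohort_totals(figures["cost"])
    print(
        f"{name}: {lo_min / 60:.1f} to {hi_min / 60:.1f} hours, "
        f"${lo_cost:.2f} to ${hi_cost:.2f}"
    )

print(f"Manual review: about {PATIENTS * MANUAL_MINUTES / 60:.1f} hours")
```

On these assumptions, even the slower model would work through the whole cohort in hours rather than days, at a total cost of under $20.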

"LLMs like GPT-4 can help screen patients for clinical trials, especially when using flexible criteria," said Dohopolski. "They’re not perfect, especially when all rules must be met, but they can save time and support human reviewers."

That time saving could be significant, given that a recent study found that up to 20% of National Cancer Institute (NCI)-affiliated trials fail due to inadequate patient enrolment. Poor enrolment not only inflates costs and delays results, but also undermines the trials’ reliability in assessing new treatments.

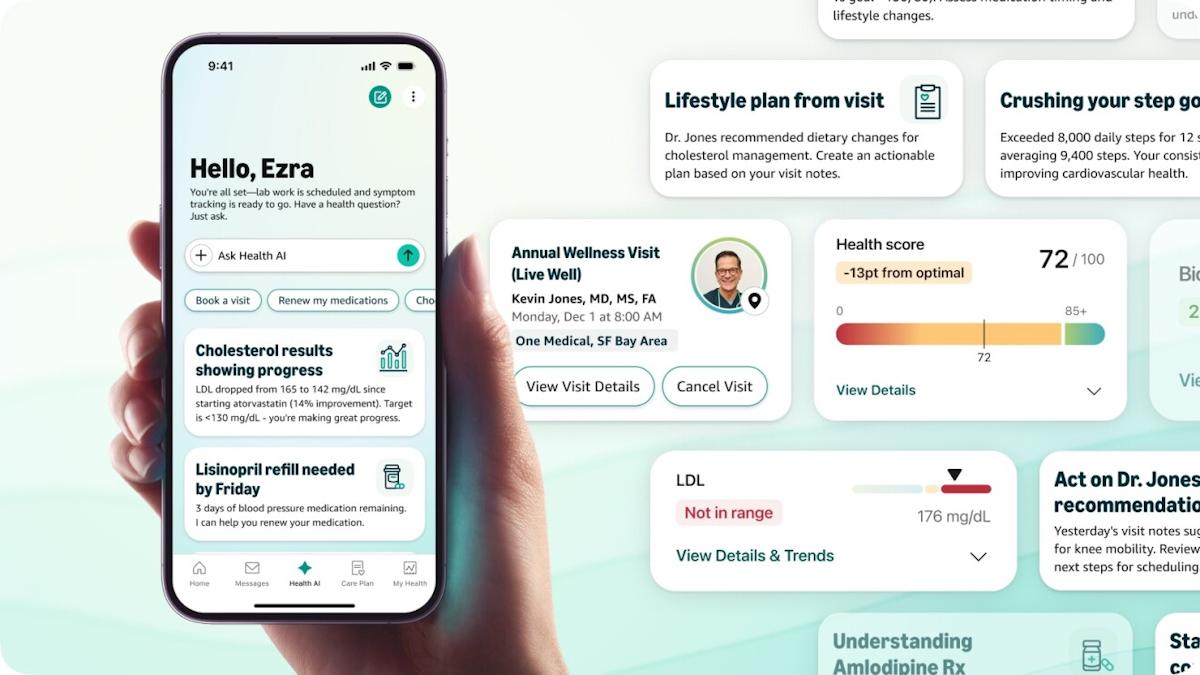

Part of the problem is that valuable patient information contained in electronic health records (EHRs) is often buried in unstructured text, such as doctors’ notes, which traditional machine learning software struggles to decipher, according to the authors of the paper. Their work is published in the journal Machine Learning: Health.

That means eligible patients may be overlooked because there simply isn’t enough capacity to review every case, they note. Using LLMs could help by flagging candidates for subsequent manual review.

The same research team have worked on a method that allows surgeons to adjust patients’ radiation therapy in real time whilst they are still on the table.

The deep learning system, called GeoDL, delivers precise 3D dose estimates from CT scans and treatment data in just 35 milliseconds. This could make adaptive radiotherapy faster and more efficient in real-world clinical settings.