An AI age: Utilising advanced technologies in pharma marketing and communications

When it comes to life sciences and artificial intelligence (AI), there is no shortage of conversation about drug discovery and development. But what has lagged behind – until now – has been the role AI can play more functionally, in pharmaceutical internal processes, marketing, and communications.

In March, the inaugural The Age of AI, Europe – from Canadian events and education organisation PharmaBrands – kicked off at London’s No 6 Alie Street with the premise that, “for the healthcare marketing and communication community, there will be no opportunity greater than that driven by the adoption of generative AI” (GenAI). Based on this shared belief, more than 50 companies came together to listen to in excess of 20 expert speakers discussing how GenAI is enhancing time to value within the pharma marketing field.

Below are a few of the highlights from the day, and what looks set to be the first of many European stagings for the PharmaBrands event.

AI meets medical: Addressing the health challenges of the modern world

Google’s senior clinical specialist and medical doctor, Dr Dillon Obika, presented the three lenses of his personal experience, as patient, clinician, and health technologist. A patient his whole life, having been born with congenital heart disease, he has professional experience spanning both the practical application of medicine and the more technological side at Google Health.

Focusing on the health challenges of the modern world, despite so much progress, Obika said the ongoing – and in some cases new – problems critically needing to be addressed include: metabolic disease, malignancy, mental health problems, and maternal mortality.

By taking the best efforts of innovation and applying those to that health landscape, however, the tables can be turned. And this is why there is such excitement around AI in healthcare, explained Obika: it is able to support clinicians, increase patient-centredness, advance life sciences research and development, and scale health delivery.

Furthermore, each new wave of AI builds on what has gone before. That progress has also transitioned from narrow medical applications in ophthalmology, radiology, novel signals, digital pathology, and other areas like dermatology, oscopies, and genomics, to much broader and, so to speak, deeper applications, with Google pushing the boundaries of what’s possible for quite some time now. And partnership, he said, will be a big part of the journey going forward, including discussions around agentic AI.

The evolution of AI: Competitive application for an uncertain tomorrow

Duncan Arbour, senior vice president of insights and innovation at Syneos Health, gave us a taste of what’s coming next, informed by three years of application of GenAI in healthcare communications.

With a ‘Frankie Says Evolve’ t-shirt on, Arbour’s delivery added character to an already colourful topic. Running through the interestingly huge focus on avatars in the industry, he covered the early days of single-use cases and stated simply that LLMs are not for producing different versions of email marketing – they are for writing code. Indeed, from mid-2023 onwards, Syneos’ mantra was ‘Don’t write prompts, write Python’.

With all this evolution, Arbour admitted that everything he is currently proud of is going to be ‘Toy Town’ within six months. His shared learnings, however, were:

- The only question now is ‘Where first?’ With reasoning models, OpenAI, and DeepSeek, the possibilities henceforth include MLR/compliance, clinical document creation, PICO and extractions, and transforming abstracts.

- The last mile is the slowest. By April he wanted to see 50% of those in the room writing code with LLMs... But writing real code is like being an artist, he warned, while using LLMs is an IKEA method: “It’s quick, it’s cheap, and you don’t get emotionally attached to it.” And that last mile? It’s value creation and value capture at an enterprise level.

- It’s software’s social media moment. Arbour predicts that there will be a shift from the top-down model of Apple’s ‘there’s an app for that,’ and it will become a case of ‘there’s your own app for that.’ Generative AI is a talent issue, not an IT issue, he says.

- There’s no I in Team. Be selfish; there are two ‘u’s in automation. Develop tools for you to do your job the way you want it done.

- This is not a drill. This is the emergency. Rhetorically asking whether the world had changed in the 825 days (as of 4th March) since the launch of ChatGPT, Arbour warned that there are but five years left to change things. In a world without a map, the best compass is code: “In a Hunger Games world, everyone in the room is in competition with everyone else to be the last standing. Be selfish: the only use case that counts is you.”

Any revolution takes a determined, innovative persistence

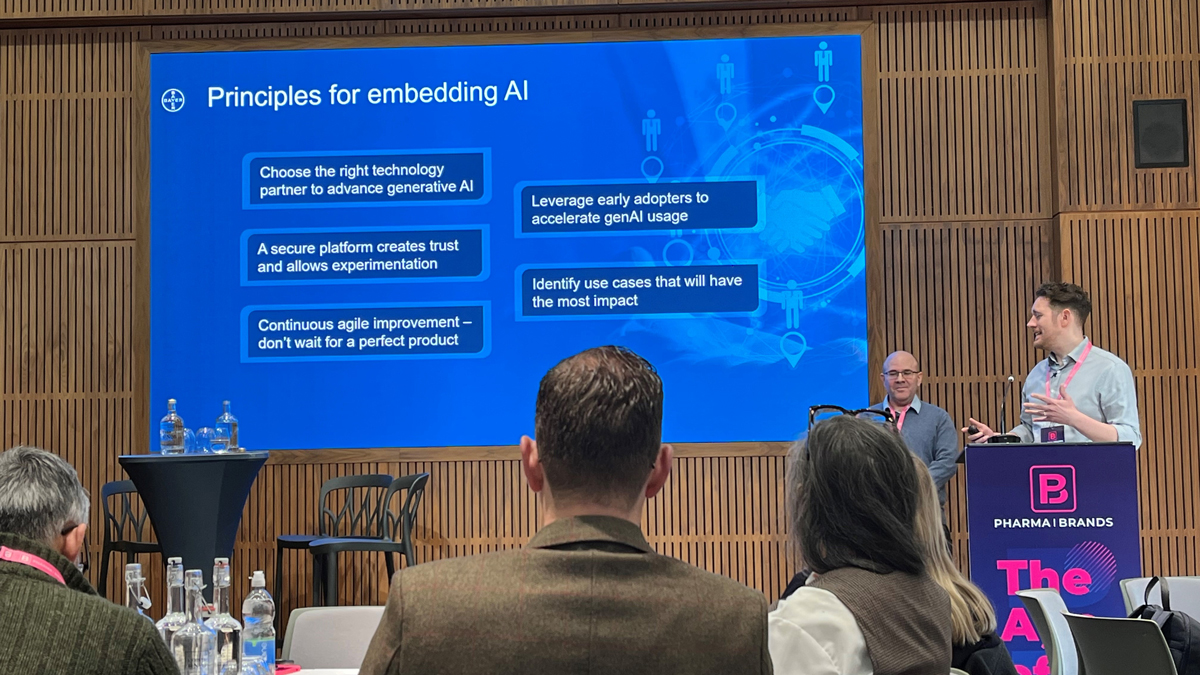

Bayer’s Dr Guy Doron – senior insight solutions lead – and Ritch Hutchings, oncology senior commercial insights and business translator, spoke of Bayer’s journey in the ‘AI revolution’ and how tools can be moved to collaborative AI solutions with HCPs.

Running through myGenAssist – their GenAI OS – they advised that the way to circumvent the ‘classic tech-adoption curve’ is to build a local community of enthusiasts, with the objective of creating a team who can become force-multipliers for AI across the business.

All photographs courtesy of Nicole Raleigh

‘Enthusiastic’ certainly could be one adjective levelled at Novo Nordisk’s Pernille Kjaempe, AI communication lead of global communications, who asked, “Is it possible to create a GenAI solution that costs only 1% of what most people might expect?”

In 2021, she was hired to boost future communication through digitalisation. Cue a period of late-night YouTube self-upskilling in ‘emerging technologies’, and the first idea that came to mind was the Spring 2022 Tone of Voice Converter; that is to say, turning heavy scientific materials into layman’s terms. And in 2025? Custom chatbots with an agentic approach at scale.

However, in response to her enthusiasm, the IT centre told Kjaempe they do AI for drug discovery, for early development, KPIs – but not for corporate communications. Nonetheless, Kjaempe kept knocking on doors. Early attempts with (non-confidential!) material in Google Cloud worked well, but of course she and her team needed to be more compliant. So it was that the AI Content Creator came into existence. And her learnings? The importance of change management and clear guidelines.

Compliance is key in pharma – it’s not a case of Campbell’s Soup or Starbucks

Julie Turnbull, senior vice president of science and regulatory at Klick Health, went deeper into scaling innovation while maintaining compliance. Her talk covered how to leverage AI for faster, smarter content and claims management, discussing how content generation and consumption have changed dramatically over the years – from personalisation to dynamic content, algorithms, segmentation, content velocity and/or content supply chain, and GenAI.

“Compliance is key in pharma – it’s not a case of Campbell’s Soup or Starbucks,” explained Turnbull. MLR reviewers have a tough enough job and they need support, from approved core claims to dynamic submissions to submission metrics.

So, what does AI change in marketing? Well, CMOs have reported positive impact from GenAI just six months after launch, she shared. And early adopters are seeing a huge amount of impact, with an increase in response rate and reduction in deployment costs. In short, it is addressing pretty much everything – except pharmaceutical compliance review.

The MLR challenge: How GenAI accelerates time to approval at scale

A panel addressing this MLR challenge included: Rakesh Kantaria, founder, Circuit Medical; Will Fraser, head of marketing UK, Roche; Robin Jones, digital brand manager, AstraZeneca; and Gareth Worthington, head of global clearance, UCB.

Worthington explained that the amount of effort needed to build a claims library is massive and MLR needs to “stop being a beast everyone is afraid of and need to cram things through.” Rather, it should “occur as close to the customer as possible and as fast as possible.” Jones agreed that “MLR still serves as the number one bottleneck to serving customers effectively and efficiently,” and suggested it is a matter of collaboration with brand teams and workforce knowledge regarding the tools, as well as medical teams, as “the MLR use case requires a high degree of certainty that it is doing the job it is needed to do.”

Fraser’s emphasis was on data quality, but oftentimes it requires focus on “a small group of enthusiastic people to show value.” He added: “It is not groundbreaking change management, but it is equally not overpromising or overhyping or promising perfection from day one. Get your hands dirty and get going with it. That is the proof point.”

For Jones, three foci are “pioneers, process, and propagation.” In other words, he said, “it’s about finding pioneers in the different areas who can share their experiences, use cases, to bring about change.”

Academia and the health system: Educating the nation

Professor David J Lowe, clinical director of innovation at the University of Glasgow and clinical lead for health innovation in the Scottish Government on NHS implementation, focused on the workforce factor challenge when it comes to successful implementation of AI.

The Digital Health Validation Lab exists to validate the value of new technologies for the health service, questioning how to build successful clusters from universities and get them into the health system – education of the nation being a fundamental preventative method, he insisted.

The potential applications of AI in healthcare include, of course, drug discovery and development, but also operational efficiency and resource management, diagnostics and imaging, predictive analytics and risk assessment, clinical documentation and workflow automation, treatment planning and personalisation, patient monitoring and remote care, and medical translation and communication.

Prof Lowe also emphasised the criticality of real-world evidence (RWE), including for clinical applicability, and ran through their healthcare AI value pyramid.

The takeaway? AI implementation can be applied widely to optimise care by speeding it up. Touching upon the NICE Evidence Standards Framework, which now includes evidence requirements for AI and data-driven technologies with adaptive algorithms, Prof Lowe, like the other speakers at this inaugural event, noted current blockers to successful adoption: leadership, coordination, evidence, consistency, scalability, and money.

This served as preamble to the panel, ‘Remain Relevant: Align with AI Applications in Hospitals’, moderated by Prof Lowe and involving Dr Arpit Srivastava, GP and clinical director, Brunel 5 Primary Care Network, and member of NICE Technology Appraisals Committee, and Dr Monique Vekeria, consultant radiologist and co-founder of messageGP, NHS.

It takes years to train a doctor and AI is a solution for filling that gap and providing efficiency and effectiveness, explained Dr Vekeria. However, Dr Srivastava described the current scenario as ‘fascinating’: the NHS is in crisis and these tools can help, but the barrier is “we can’t put a life vest on – there is no time.” Another key barrier, he said, is getting people to trust AI.

In Dr Srivastava’s own surgery or consultation service, he won’t type one note down – there is an ambient AI listening to the whole consultation and at the end of the consultation it will type it all up with the exact structure he wants. This allows him to look at the patient and not just input into a screen. That, he shared, should be a basic standard of care and not a gold standard: “The mere ability to look, human to human, at a patient.”

How, then, will AI take things forward? For Dr Srivastava, it is a case of going backwards in a way, to the essential nature of medicine. Nonetheless, it’s ever a matter of numbers and workload, his manager asking him, ‘How many more appointments can you get into your clinic now?’, and that must not be the outcome of AI implementation, he warned.

In radiology, Dr Vekeria explained that, while AI helps “unpack” scans, its primary benefit is time-saving that enables additional services. Both agreed on the urgent need for regulatory and ethical frameworks, noting that since AI output still requires verification, “the true time saving is still debatable.” Further evolution is needed before realising AI’s full potential.

Omnichannel (yes), and successful, legal execution of GenAI

Managing director of CCI Life Sciences, Dr Paul Tunnah, moderated a third panel, ‘Omni-Channel Victory: A Behind-The-Scenes Deep Dive on Generative AI Successful Execution’, joined by: Emma Harris, global digital innovation lead, Ferring; Nico Renner, product manager of oncology, BeiGene; and Emma Vitalini, head of global medical digital strategy, Amgen.

It’s all about using data to tell a story, insisted Harris: “We talk about omni and right message, right channel, right time – it’s supporting the employee to give the best experience to the HCPs and by extension the patients.”

And when it comes to AI, it has been ‘sprinkled’ across pretty much all that’s done in pharma, said Tunnah, and Harris – who mentioned that she loved “Arbour’s aspiration, but it’s not reality” – deemed omnichannel “something that can bring groups together,” but advised that it should be built “piece by piece and [brought to] where people are working.”

It is a matter of augmentation, rather than any notion of replacement. While Ferring have “been on a longer journey to play catch up, in some respects,” she also shared that, “it’s about playing smarter, not harder […] It’s about the opportunity to look forwards and know who comes in at which juncture. And proving value to the organisation.”

Harry Jennings, partner at VWV, meanwhile, provided a highly interesting navigation of the legal landscape and the intellectual property, ethical, and legal implications of GenAI in commercial activity.

“Who invited the lawyer?” he joked, adding that lawyers are misunderstood because they point out risks. But it’s not to stop the fun, rather to go in with open eyes.

From a business perspective, if you have an AI project and you need a use case, a business case, the budget signed off, and to make the thing actually happen – legal runs through all this. The best thing is to start thinking about legal issues early on, he advised, spotlighting four key legal considerations for AI:

- Do you own it?

- Are you allowed to use it?

- What standards do you need to meet?

- If a problem occurs, who pays?

Input data is highly important, obviously. You either have the data already, or you go and find it somewhere else. If it’s not yours, you should consider whether you have the rights to use it for your project, Jennings said. And if you can’t identify access terms for your particular dataset, you need an agreement in place to give you the right. This data is often messy, unstructured, and diverse in form – so, there’s this preliminary project just getting the data ready for AI.

The upshot is that there will be lots of relationships in any AI project: contracts, promises, rights, standards, reliance, and support. “Don’t try doing this without a contract!” he warned, still seeing this happen and still surprised every time.

In all the above relationships, ideally you want to be looking at a contract and seeing nice clear IP drafting – in reality, though, this is wishful thinking. Which loops back to risk analysis. With a ‘new-ish’ sector like AI, said Jennings, things are still being worked out and there are certain concepts – reasonable care, for example – where you don’t necessarily know what that means technically and/or legally. Is there something independent and objective that can be pointed to as a defence? If someone asks the reason and reasonableness behind an approach or reliance and purpose – list the standards, the experts: “Evidence in short, and evidence is something that pharma understands.”

AI in pharma – what could possibly go wrong? Well… From accuracy and bias to injury, third party rights, performance and availability, privacy and security, and ethics/reputation – the answer is, a lot. Safety is the biggest issue. AI in clinical decision processes? That certainly scares Jennings. The simplest question in these matters is, ‘Can it hurt someone?’

There are people who are nervous about the coming AI apocalypse, he noted. To him, that means ethical issues and safety issues will be spotted quickly and highlighted for the world to see: “Don’t be the bad example. Sit closely to ethical standards,” he advised, adding that, “there is very detailed guidance on the EU AI Act prohibited list – a flavour of what you shouldn’t do!”

He saved third party rights until the end: “the best for last,” he mused. If using a pre-trained system, find out what it was trained on, what data, and how. Did the developer have the rights to the data? The answer is no for LLMs, for example. Did ChatGPT negotiate with all the websites around the world and get the licences for scraping their content? No, it didn’t. It’s a question of copyright, basically, and changes are on the horizon. Therefore, the overall takeaway was: proceed with a cautious approach.

About the author

Nicole Raleigh is pharmaphorum’s web editor. Transitioning to the healthcare sector in the last few years, she is an experienced media and communications professional who has worked in print and digital for over 20 years.


This article appears in Deep Dive: Market Access and Commercialisation 2025.