Tech & Trials: What’s all this about AI, then?

Welcome to Tech & Trials – a guided tour to the world of AI and clinical trials.

This column aims to demystify artificial intelligence (AI) and present practical examples of the technology in action. We hope you’ll not only become more informed, but more confident in how AI can help you, your team, and your organisation.

With that, let’s dive into what AI is all about.

Classic AI and the logic of Sudoku

AI involves leveraging software to do cognitive tasks: basically, tasks that require thinking. A spreadsheet isn’t AI – you put in data and formulas. The software that runs your ATM isn’t AI either – it’s just straightforward calculations. But if your software translates text from one language to another, or drives a car, it’s AI! It’s a task that requires thought.

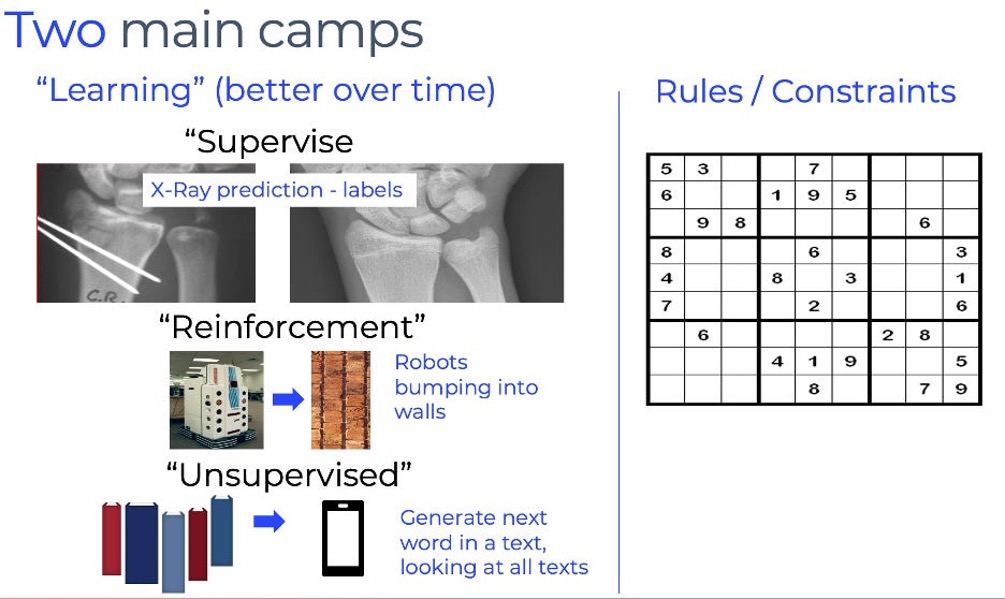

In general, there are two schools of AI: classic AI and machine learning. In classic AI, cognitive tasks are modelled (e.g., turned into software) using techniques such as logic and “constraint based reasoning”. These are complicated rules (more complicated than an ATM) that in combination allow the software to perform sophisticated mental tasks.

As an example, consider a Sudoku puzzle. (Here’s a good primer). In Sudoku, you must use the numbers 1-9 to fill puzzle squares such that no row, column, or mini-3x3 square repeats one of those numbers. They are hard and fun!

Simple rules aren’t sufficient to have a program solve these, but by combining logic and “constraint-based reasoning” (e.g., no number can repeat in a row) they become solvable, automatically. That’s classic AI. The key is that we’ve translated our human thinking into constraints and logical rules for the software to leverage.

The other school of AI – machine learning (ML) – learns from data instead.

ML can learn directly from data, without human interference. Or it can learn from examples that we specifically compose. Sometimes, it can learn from the mistakes it makes. We consider this all data, just in different forms (e.g., examples, mistakes, etc.), and so, when an AI learns from data, rather than us providing the rules or logic, we call it “machine learning”.

Unsupervised vs. supervised ML

When the algorithm uses data all by itself, we say it’s “unsupervised” machine learning. A common unsupervised example is text message auto-complete. Here, the AI predicts the next word you’re going to type, based on the previous words. If a model considers every text message you’ve ever sent, it might predict that 86% of the time, after you type “Oh my gosh!” you will then type, “lol!” – this is a cognitive task (predict the next word) using just data itself. Hence, it’s “unsupervised.”

Alternatively, when we provide examples to the AI, we call it “supervised” machine learning. For instance, consider an AI that classifies an X-ray as “broken” or “not broken”. To do this, we would provide example X-rays of both broken and not broken bones. The AI would learn to differentiate based on these examples.

AI, chess, and Go

Finally, the model can learn from its own mistakes, due to the data, which we call “reinforcement” machine learning. Think of a Roomba vacuum wandering around the house – it bumps into walls, and hopefully learns not to bump into that wall again. Reinforcement learning can even be used for chess, since each move can be “scored”. The AI starts moving pieces randomly and scoring the move: if it’s a good scoring move, it will consider that move next time; if it’s a bad move, it won’t. This is (very roughly) how Google’s AI became amazing at Chess and Go and even predicting protein folds.

The crucial difference between machine learning and classic AI is that machine learning can “get better”. Constraints are limited, since we define them. But machine learning improves with more data or examples.

This insight leads us to the current hot-topic: Deep Learning.

What if we could use huge volumes of data? What is the limit on what we can model?

Deep Learning uses a machine learning technique called a neural network, which is basically set of “weights” (numbers) that are combined into “layers”. Think of each weight like a person yelling their opinion, where their volume conveys their conviction. A layer is then the grouping together of all those voices into some overall assessment (“the yesses are pretty loud!”).

Suppose an X-ray of an arm comes in: some people loudly yell, “It’s broken” and some quietly say, “Maybe it’s broken” and a few scream, “It’s not broken.” The layer combines these voices, such that, overall, the group consensus is that the X-ray generates a bit-louder-than-normal assessment of “It’s broken.”

During “Training” the algorithm assesses which yellers were wrong and which were right about different X-rays (where we know the answer ahead of time, if it’s supervised). If the person yelled the right assessment, we believe that person a bit more. If they were wrong, we shush them a bit. We do this over and over, and eventually the correct people yell the loudest each time.

This example is supervised (since we gave example X-rays), but we can use Deep Learning in unsupervised ways, too. Consider the predict-the-next-word task. If the algorithm sees, “I love” and some people yell “cats” and others quietly whisper “dogs” and someone even says “platypus” – well, we can look over all the previous text messages and see who was right and who was wrong, and promote (or shush) accordingly.

Deep Learning’s magic hinges upon the insight that more data leads to better learning, so AI researchers devised ways to generate massive neural networks (billions of yellers, across many layers) and feed them volumes of data (like, most of the internet!). This allows the models to do amazing things because it can learn all sorts of context (surrounding words) and their relationships.

With our predict-the-next-word example, if the model looks at all of your texts, if you said, “I love ___”, it would be more probable that the blank is “you”, rather than “broccoli”.

But, if it looks at the entire internet, and has a massive neural network to capture all those relationships, it can learn eerily precise predictions.

Consider, the most common fill-in-the-blank:

If I say, “The sky is ___”, for the next word you’ll probably say “blue”.

If I say, “It’s gloomy and raining and the sky is ___” and I ask you for the next word, you’ll probably say “gray”.

In short, context matters!

Now, imagine if the AI uses the entire internet as context, it can do unbelievable things, beyond fill-in-the-blank; such as write computer code (or funky haikus).

While amazing, though, there are still things AI can’t do (well). One area it struggles with is reasoning. For instance, even the most advanced AI still has a hard time determining if two circles in a picture overlap. But this is largely because we don’t yet know how to give it that type of data to learn from (in the way that we can feed it the internet to learn about text).

Keep an eye out for the next column: Can AI save us from our own data deluge?