AI breast cancer screening 'not accurate enough to replace humans'

There is not yet enough evidence to allow artificial intelligence-based breast cancer detection to be fully integrated into screening services, says a UK study.

The analysis of a dozen studies of AI-powered image recognition software for breast cancer screening – published in the British Medical Journal – has found that the technology isn't yet reliable enough to replace radiology specialists.

The researchers behind the study, from the University of Warwick, based their conclusions on studies carried out since 2010 that collectively included mammogram data from more than 131,000 women in the US, Sweden, Germany, the Netherlands and Spain.

Previous research has suggested that AI systems outperform humans and might soon be used instead of experienced radiologists, but the analysis found that, on the whole, those studies were of "poor methodological quality."

"Claims have been made that image recognition using AI for breast screening is better than experienced radiologists and will deal with some of the limitations of current programmes," they write.

However, "overall, the current evidence is a long way from the quality and quantity required for implementation in clinical practice." The work was commissioned by the UK National Screening Committee.

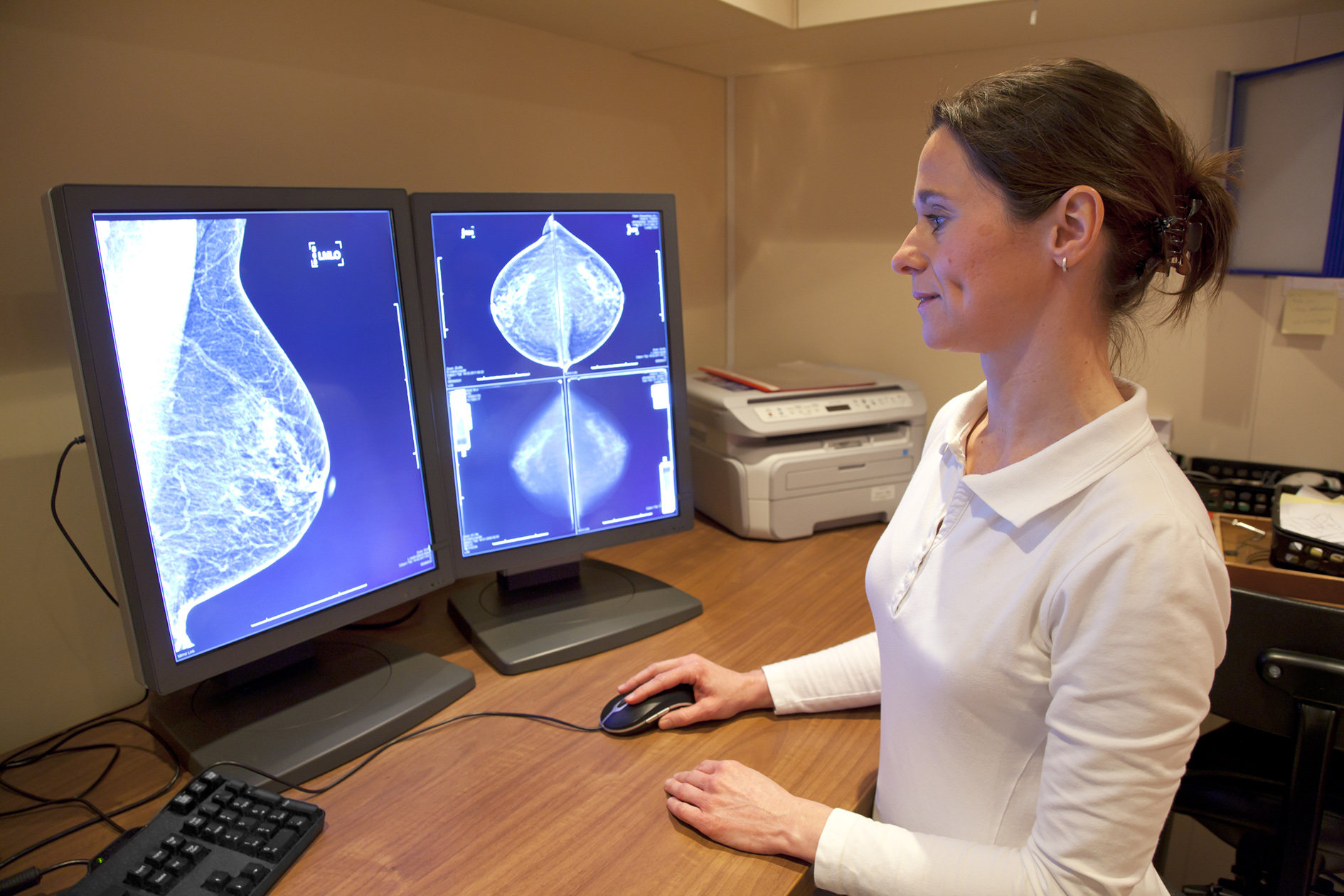

Breast cancer is a leading cause of death among women worldwide, and many countries have introduced mammography screening programmes to detect and treat it early. However, examining mammograms for early signs of cancer is high-volume, repetitive work for radiologists, and some cancers are missed.

Three large studies covering almost 80,000 women compared AI systems with the clinical decisions of the original radiologist. Most of the AI systems evaluated (94%) were less accurate than a single radiologist, and all were less accurate than the consensus of two or more radiologists – the standard practice in Europe.

In contrast, five smaller studies involving just over 1,000 women found that all of the AI systems were more accurate than a single radiologist, but these studies were at high risk of bias, and their results were not replicated in larger studies.

In three studies, AI used for triage screened out 53%, 45% and 50% of women as low risk, but it also screened out 10%, 4% and 0%, respectively, of the cancers detected by radiologists.

Evidence suggests that the accuracy of different AI systems, and the spectrum of disease they detect, varies, according to the researchers. They acknowledge, however, that AI algorithms are constantly improving, so reported assessments might be out of date by the time a study is published.

"Well designed comparative test accuracy studies, randomised controlled trials, and cohort studies in large screening populations are needed which evaluate commercially available AI systems in combination with radiologists in clinical practice," they conclude.